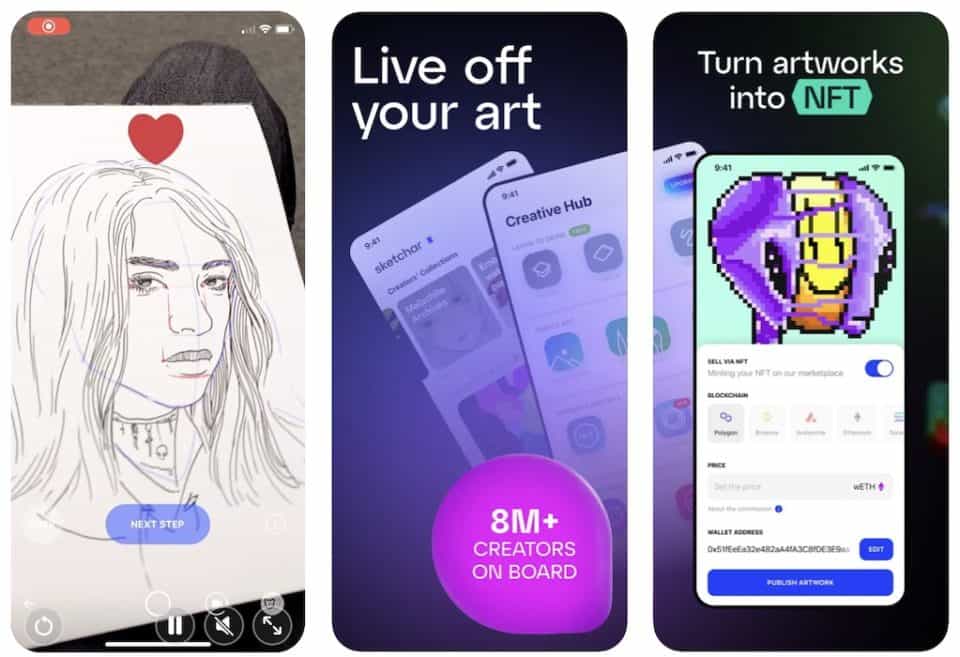

AR Sketchbooks for Creative Artists combine traditional drawing with augmented reality. Because artists can visualize their creations in immersive environments, these sketchbooks bridge the physical and digital realms and let artists explore new dimensions of creativity, making art more interactive and engaging.

Integrating augmented reality into sketchbooks opens many possibilities, from interactive features that support real-time collaboration to tools that help artists refine their technique. As the art world evolves, adopting such technology can help artists stand out in a competitive landscape, expand their horizons, and rethink their creative processes.

The Evolution of Artificial Intelligence: A Comprehensive Overview

Artificial intelligence (AI) has evolved rapidly over the past several decades, moving from a concept rooted in science fiction to a central part of modern technology. Its history is marked by major achievements, setbacks, and a continuing effort to build machines that can mimic human intelligence. This article traces the history, current developments, and future prospects of AI, highlighting its implications for various sectors.

The concept of AI dates back to ancient mythology and philosophy, but the field’s formal foundations were laid in the mid-20th century.

The term “artificial intelligence” was introduced in the 1955 proposal for the 1956 Dartmouth Summer Research Project on Artificial Intelligence, organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon. This pivotal workshop brought together researchers who shared the goal of building machines that could think and learn like humans. Early AI research focused on symbolic reasoning, problem solving, and algorithms that could perform specific tasks. One of the first significant milestones was the creation of dedicated AI programming languages: LISP, developed by McCarthy in 1958, and later Prolog, introduced in 1972.

These languages facilitated the development of AI applications, including natural language processing and automated theorem proving. During this period, researchers also built early programs that could play games such as chess, demonstrating that machines could carry out complex tasks.

Despite early optimism, the field faced serious challenges, leading to periods of reduced funding and interest known as “AI winters.” These downturns stemmed mainly from the limitations of available algorithms and hardware, combined with unrealistic expectations from the public and funding agencies.

However, the 1980s saw a resurgence in AI research, driven largely by the success of expert systems, computer programs that emulate the decision-making of a human expert in a specific domain. Organizations deployed these systems in fields such as medicine, finance, and manufacturing.

The late 1990s and early 2000s marked a turning point in AI’s trajectory with the rise of machine learning (ML) and data-driven approaches.
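The contrast between expert systems and the data-driven methods that followed can be sketched in a few lines of Python. In expert systems, people wrote the rules. In data-driven methods, the rules are fitted to examples. This is a deliberately tiny illustration built on several assumptions: a single hypothetical numeric feature `x`, an invented expert cutoff of 5.0, and invented labeled examples. A one-feature threshold classifier (a decision stump) stands in for the statistical methods. Real expert systems chained many if-then rules through an inference engine. The function names are hypothetical.

```python
# Minimal sketch: a hand-written rule versus a rule fitted to labeled data.
# The feature, the expert's cutoff, and the training data are hypothetical.

Example = tuple[float, bool]  # (feature value x, correct label)


def expert_rule(x: float) -> bool:
    """Expert-system style: a person chose the cutoff (assumed to be 5.0)."""
    return x > 5.0


def learn_threshold(examples: list[Example]) -> float:
    """Data-driven style: choose the cutoff t that misclassifies the fewest
    examples under the rule 'predict True when x > t' (a decision stump)."""
    xs = sorted({x for x, _ in examples})
    # Try one cutoff below every value (predict all True), then each value.
    candidates = [xs[0] - 1.0] + xs

    def errors(t: float) -> int:
        return sum((x > t) != label for x, label in examples)

    # min() returns the first candidate with the fewest errors.
    return min(candidates, key=errors)


def accuracy(rule, examples: list[Example]) -> float:
    """Fraction of examples the rule labels correctly."""
    return sum(rule(x) == label for x, label in examples) / len(examples)


training = [(1.0, False), (2.5, False), (3.0, False),
            (3.5, True), (4.0, True), (6.0, True)]

t = learn_threshold(training)


def learned_rule(x: float) -> bool:
    """The rule the data produced: predict True above the learned cutoff."""
    return x > t


print(f"learned cutoff: {t}")
print(f"expert rule accuracy:  {accuracy(expert_rule, training):.2f}")
print(f"learned rule accuracy: {accuracy(learned_rule, training):.2f}")
```

On this invented data the learned cutoff is 3.0, which labels all six examples correctly. The hand-picked cutoff of 5.0 misses the two positives at 3.5 and 4.0. The learned rule improves automatically when given more or better data. The hand-written rule changes only when an expert edits it.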

Researchers shifted their focus from rule-based systems to statistical methods, enabling machines to learn from data rather than relying solely on pre-programmed rules. This shift was made possible by the growing availability of large datasets and the exponential growth of computing power. As a result, AI systems began to demonstrate strong capabilities, particularly in image and speech recognition.

IBM’s Deep Blue, which defeated world chess champion Garry Kasparov in 1997, was a landmark achievement that showcased the newfound potential of AI, although it relied primarily on specialized hardware and large-scale search rather than on machine learning.

This victory drew wider attention to AI research and encouraged significant investment in advanced algorithms. In the early 2010s, Google’s investment in deep learning and neural networks further transformed the landscape. Deep learning, which uses artificial neural networks with many layers, has enabled breakthroughs in applications such as image classification, natural language processing, and autonomous systems.

Today, AI is embedded in many aspects of daily life, from virtual assistants like Siri and Alexa to the recommendation systems used by streaming services and e-commerce platforms.
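Recommendation systems are built in many ways. One classic family is collaborative filtering, which suggests items that users with similar tastes rated highly. The Python sketch below shows a minimal user-based variant that uses cosine similarity. The users, items, ratings, and the helper names `cosine` and `recommend` are hypothetical. The sketch is not a description of how any particular streaming or e-commerce platform works.

```python
import math

# Hypothetical ratings on a 1-5 scale: user -> {item: rating}.
ratings = {
    "ana":  {"film_a": 5, "film_b": 4},
    "ben":  {"film_a": 5, "film_b": 4, "film_c": 5, "film_d": 1},
    "cara": {"film_a": 1, "film_b": 5, "film_c": 1, "film_d": 5},
}


def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity computed over the items both users have rated."""
    common = u.keys() & v.keys()
    if not common:
        return 0.0
    dot = sum(u[i] * v[i] for i in common)
    norm_u = math.sqrt(sum(u[i] ** 2 for i in common))
    norm_v = math.sqrt(sum(v[i] ** 2 for i in common))
    return dot / (norm_u * norm_v)


def recommend(user: str, ratings: dict, k: int = 2) -> list[str]:
    """Rank items the user has not rated by the similarity-weighted
    average of other users' ratings for those items."""
    mine = ratings[user]
    totals: dict[str, float] = {}
    weights: dict[str, float] = {}
    for other, theirs in ratings.items():
        if other == user:
            continue
        sim = cosine(mine, theirs)
        if sim <= 0:
            continue  # skip users with no positive similarity (e.g. no co-rated items)
        for item, score in theirs.items():
            if item not in mine:
                totals[item] = totals.get(item, 0.0) + sim * score
                weights[item] = weights.get(item, 0.0) + sim
    predicted = {item: totals[item] / weights[item] for item in totals}
    return sorted(predicted, key=predicted.get, reverse=True)[:k]


print(recommend("ana", ratings))
```

Ana's two ratings match Ben's exactly, so Ben's opinions carry the most weight. As a result, `film_c` (which Ben rated 5) ranks above `film_d`. Production systems typically add rating normalization, implicit signals such as clicks or watch time, and far larger models. This sketch only shows the core idea of learning from user behavior.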

In recommendation systems and similar services, machine learning algorithms analyze user behavior to personalize experiences and increase customer engagement. AI technologies have also found applications in healthcare, where predictive analytics can assist in disease diagnosis and treatment planning, and in finance, where algorithms power high-frequency trading and fraud detection.

These ongoing advances raise important ethical and societal questions. Concerns about job displacement through automation, privacy in the use of personal data, and the potential for biased algorithms highlight the need for responsible AI development.

Researchers and policymakers increasingly advocate frameworks that ensure transparency, accountability, and fairness in AI systems. Ethical guidelines and regulatory measures are essential to mitigate risks while preserving AI’s benefits.

Looking ahead, the prospects for AI are both exciting and challenging. Advances in techniques such as generative adversarial networks (GANs) and reinforcement learning open new avenues for exploration.
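Reinforcement learning, one of the techniques named above, learns which actions pay off from rewards observed through trial and error. The Python sketch below implements tabular Q-learning, one classic reinforcement learning algorithm, on a hypothetical five-cell corridor. The environment, the reward, the helper names, and the hyperparameters (`alpha`, `gamma`, `epsilon`, the episode count) are all assumptions chosen for illustration. None of them is drawn from any system mentioned in this article.

```python
import random

# Hypothetical toy environment: a corridor of cells 0..4. The agent starts in
# cell 0 and receives reward 1.0 only when it reaches the goal cell 4.
N_STATES = 5
GOAL = N_STATES - 1
ACTIONS = (-1, +1)  # index 0 = step left, index 1 = step right


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Apply a move; the corridor walls keep the agent inside 0..GOAL."""
    nxt = min(max(state + action, 0), GOAL)
    done = nxt == GOAL
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    """Tabular Q-learning: refine action-value estimates by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action index]
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:  # cap episode length
            # Explore with probability epsilon (or when estimates tie);
            # otherwise exploit the action with the higher estimate.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, ACTIONS[a])
            # Nudge the estimate toward reward + discounted best next value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state, steps = nxt, steps + 1
    return q


q = q_learning()
policy = ["left" if q[s][0] > q[s][1] else "right" for s in range(GOAL)]
print(policy)
```

Nobody tells the agent that moving right is correct; it discovers this from rewards alone. After training, the greedy policy read from `q` should point right in every non-goal cell, which is the shortest route to the reward. The estimate for stepping right from cell 3 should approach 1.0.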

GANs can generate realistic images, videos, and other media, which raises questions about authenticity and copyright. Reinforcement learning, on the other hand, lets systems learn which actions maximize reward through trial and error, contributing to progress in robotics and autonomous vehicles.

Moreover, integrating AI with other emerging technologies, such as the Internet of Things (IoT) and blockchain, could transform industries by enabling smarter decision-making and greater efficiency.

For example, AI-driven analytics can improve predictive maintenance in manufacturing, while blockchain technology can help protect the integrity and security of data used in AI applications.

In conclusion, the evolution of artificial intelligence has been shaped by significant milestones, transformative technologies, and a growing recognition of its societal implications. As AI continues to advance, researchers, industry leaders, and policymakers must collaborate to shape a future in which AI serves as a tool for positive change.

By addressing ethical considerations and prioritizing responsible development, we can realize the full potential of AI while ensuring that it benefits all of humanity.

The exploration of artificial intelligence is far from complete; it remains a dynamic and rapidly evolving field. Continued investment in research, education, and interdisciplinary collaboration will be essential in guiding AI’s trajectory and in determining how it can be integrated into society to enhance human capabilities and address pressing global challenges.